Millions have already used Claude to do far more than chat.

After a quiet period of experimentation, Anthropic has officially opened the doors for anyone to build and share fully interactive AI applications with Claude, no coding know-how required.

In recent months, users have generated more than 500 million creations through the platform. These range from language study games and digital tutors to data tools that answer spreadsheet questions in real time.

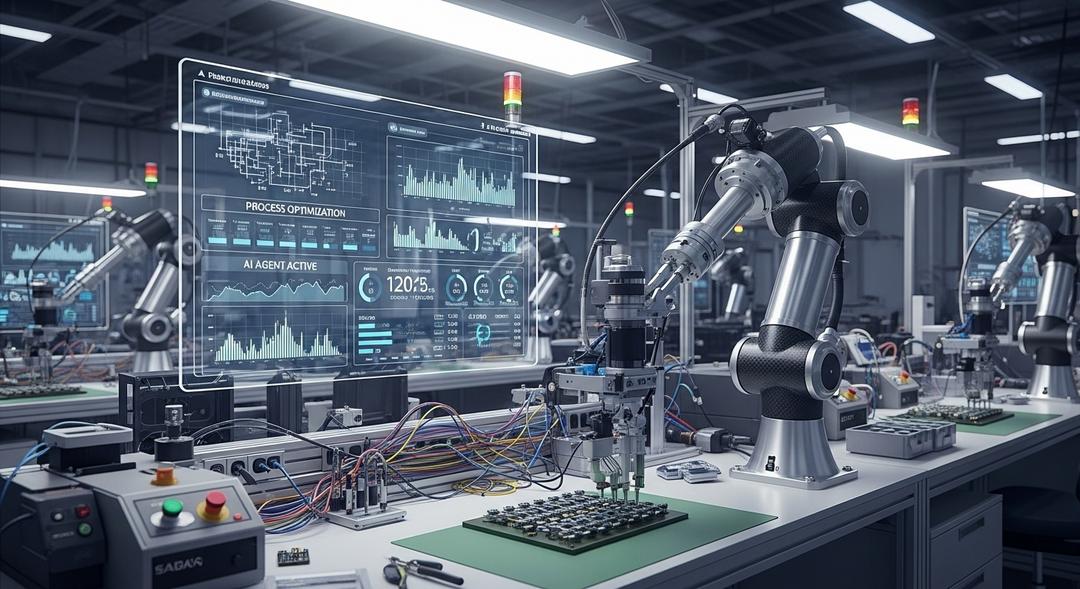

The key change now is intelligence baked into every creation. Apps built with Claude can interpret input and adjust their output instantly, turning what was once static, cut-and-paste output into living software.

OpenAI has tried to outpace the field with features like Canvas, which allows side-by-side editing of text. Anthropic, however, is shifting its focus to letting users share ready-made apps, positioning Claude as a springboard for full-blown problem-solving tools.

Traditional chatbot exchanges stick to single question-and-answer rounds. Claude’s new “artifacts” let users create their own space where the AI’s output can be used, modified, and distributed as a working app to others.

One executive at Anthropic said, “Think about it like moving from making a set of flashcards to building a flashcard app.” The result is an experience where anyone can make tools for classrooms, offices, or personal productivity with just a few typed requests.

Rethinking Who Builds Software

Early testers have already come up with story-driven games, tutoring tools that adapt to student answers, and analyzers that surface insights from uploaded documents. These creations require no developer background, which opens the door to creativity from a much wider pool of builders.

The revenue strategy centers on free access at first, drawing crowds to experience the thrill of building. As users seek more powerful features, they can upgrade to paid subscriptions.

While other marketplaces focus on letting creators cash in, Anthropic's model is built around a vibrant ecosystem where sharing comes first. By giving away the platform's core functions, the company hopes its users will become its best advertisements.

But as AI hands its toolkit to the masses, content moderation becomes more urgent. Anthropic confirms that protections are already in place: built-in AI guardrails review new apps, all shared material must meet strict standards, and users can report questionable content for investigation.

“Implementing multi-layered protections is a priority,” says the company’s updated policy, which now includes both live and delayed checks as well as in-depth pre-launch reviews.

The industry, meanwhile, is grappling with whether this democratization means programmers will soon be outnumbered. Studies from firms like Gartner and Forrester point to a broad shift: most new apps now come from nontechnical builders, and companies are saving millions by skipping traditional hires.

Even so, Anthropic maintains that full-featured, enterprise-grade projects still rely on experienced developers for scalability and security. The real skill shift underway is toward collaboration between human experts and AI assistants, rather than outright replacement.

This move is not just a technical evolution but a chess match, with companies like Anthropic and OpenAI competing fiercely to make their interface everyone's go-to tool for building tomorrow's digital world.