Cybercriminals have started using v0, a generative artificial intelligence tool from Vercel, to whip up fake login portals that look almost exactly like the real thing. Okta researchers Houssem Eddine Bordjiba and Paula De la Hoz spotted this shift and describe it as a leap in how criminals are using generative AI to upgrade their phishing tactics.

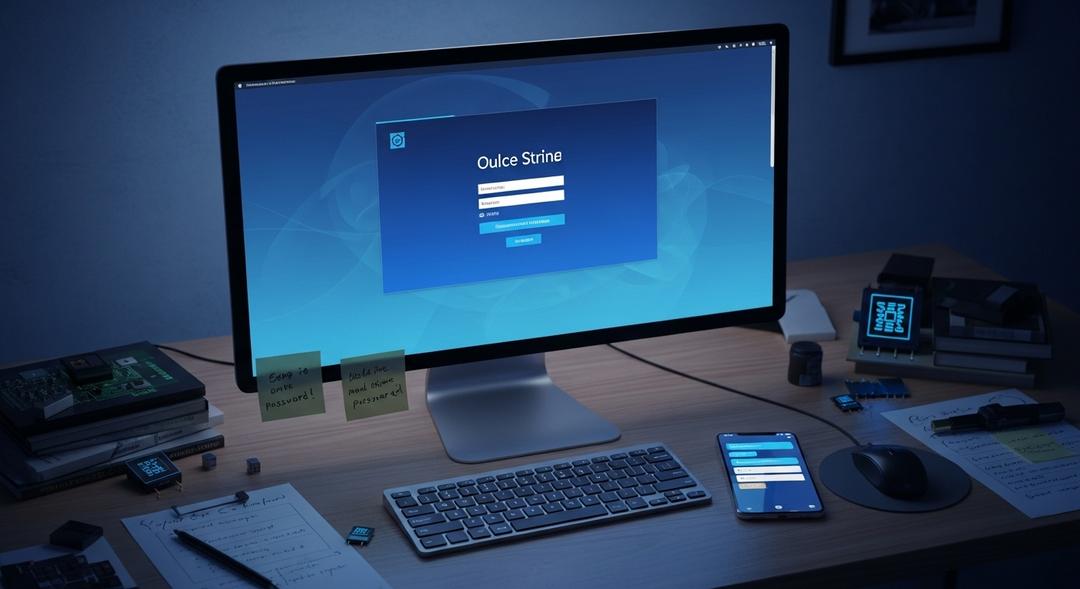

The technology behind v0 is straightforward. It lets anyone create functional web pages from plain-language prompts rather than lines of code, which means attackers no longer need to know how to program at all. The result is credential-stealing clones of company login pages that can be produced faster and at a much larger scale.

The attackers even hosted assets such as the targeted companies' logos on Vercel's infrastructure, seeking to exploit the trust associated with an established developer platform. One Okta customer was directly targeted, prompting a swift disclosure to Vercel, which shut down the offending sites.

The alarming ease of tools like v0 and similar projects found on platforms such as GitHub marks a clear break from the painstaking setup phishing once required. Enterprising criminals now just type what they need, click submit, and watch as the AI does the rest.

Generative AI and Cybercrime’s New Reality

“The use of a platform like Vercel’s v0.dev allows emerging threat actors to rapidly produce high quality, deceptive phishing pages, increasing the speed and scale of their operations,” said Okta’s threat intelligence team.

Beyond fake web pages, cybercriminals are also turning to large language models that operate without filters or safeguards. Models like WhiteRabbitNeo openly advertise their lack of constraints, and unrestricted models of this kind let criminals automate tasks that mainstream, safety-aligned AI services would refuse.

Cisco Talos researcher Jaeson Schultz put it this way: “Uncensored LLMs are unaligned models that operate without the constraints of guardrails. These systems happily generate sensitive, controversial, or potentially harmful output in response to user prompts.”

The entire landscape of phishing is shifting. In addition to fake emails and spoofed logins, there have been incidents involving cloned voices and even fraudulent videos, all powered by AI. These tools aren't just helping adversaries fool individuals; they are automating deception, creating sprawling networks designed to trick anyone in their path.