A new artificial intelligence model is stepping up to tackle digital tasks that once demanded hands-on attention.

Google’s latest release, the Gemini 2.5 Computer Use model, is now available through its API, giving developers a powerful tool for building agents that interact with software just as a user would.

The model handles jobs that need more than a simple script: it can fill out forms, click buttons, scroll through content, and even sign in to web platforms. That takes it beyond plain programmatic automation, bridging the gap between automated tasks and real-world browsers and apps. Google says the model delivers faster and more reliable results than competing options on benchmarks for web and mobile tasks.

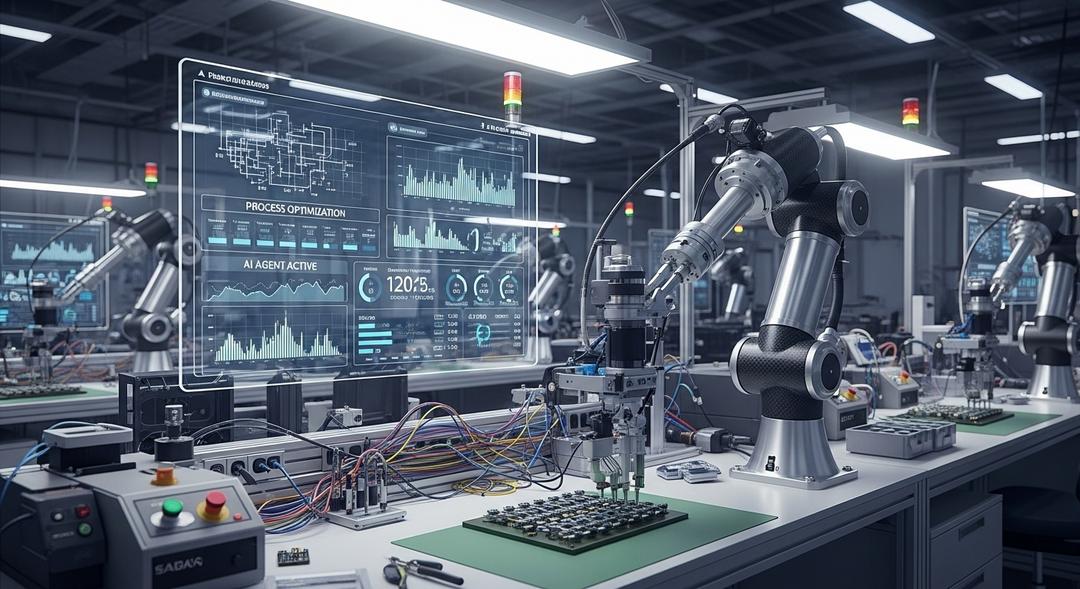

The tool works in a loop. At each step, the agent receives the user’s request, a screenshot of the current app or webpage, and a record of its previous actions. Developers can specify exactly which UI actions the model may use, or add custom functions if their use case calls for it. The model analyzes the screen and picks the next move, such as typing into a field or clicking a button; once that action is carried out, the cycle repeats with a fresh screenshot.
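To make the loop concrete, here is a minimal Python sketch under stated assumptions. It does not call the real Gemini API: `stub_model` is a hypothetical rule-based stand-in for the model, `FakeBrowser` is a hypothetical stand-in for a browser whose "screenshot" is just a dictionary of page state, and the action names `type_text` and `click` are illustrative, not the model’s actual action set. What it shows is the control flow: the request, screenshot, and history go in; one action comes out; the action runs; and the cycle repeats until the model signals it is done.

```python
from dataclasses import dataclass, field


@dataclass
class Action:
    """One UI action proposed by the (hypothetical) model."""
    name: str                                  # illustrative names: "type_text", "click", "done"
    args: dict = field(default_factory=dict)


class FakeBrowser:
    """Hypothetical stand-in for a real browser; its "screenshot" is a dict of page state."""

    def __init__(self) -> None:
        self.fields = {"email": ""}
        self.submitted = False

    def screenshot(self) -> dict:
        # Return a copy so the model sees a snapshot, not live state.
        return {"fields": dict(self.fields), "submitted": self.submitted}

    def execute(self, action: Action) -> None:
        if action.name == "type_text":
            self.fields[action.args["field"]] = action.args["text"]
        elif action.name == "click" and action.args.get("target") == "submit":
            self.submitted = True


def stub_model(request: str, screenshot: dict, history: list) -> Action:
    """Hypothetical rule-based stand-in for the model (this toy version ignores history)."""
    if screenshot["submitted"]:
        return Action("done")
    if not screenshot["fields"]["email"]:
        email = request.split()[-1]  # toy parsing: assumes the email is the last word
        return Action("type_text", {"field": "email", "text": email})
    return Action("click", {"target": "submit"})


def run_agent(request: str, env: FakeBrowser, model, allowed: set, max_steps: int = 10) -> list:
    """Request + screenshot + history in, one action out, execute, repeat."""
    history: list = []
    for _ in range(max_steps):
        action = model(request, env.screenshot(), list(history))
        if action.name == "done":
            break
        if action.name not in allowed:
            # Developers decide which UI actions the agent may use.
            raise ValueError(f"action {action.name!r} is not allowed")
        env.execute(action)
        history.append(action)
    return history


browser = FakeBrowser()
steps = run_agent("Sign up with user@example.com", browser, stub_model, {"type_text", "click"})
print([a.name for a in steps])  # ['type_text', 'click']
print(browser.fields["email"])  # user@example.com
```

The `allowed` set plays the role of developers choosing which UI actions the model may use; a real deployment would replace both stubs with calls to the model and to a browser automation tool.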

Safety and Early Results

Of course, letting artificial intelligence agents operate freely in user interfaces carries serious responsibility. To guard against misuse, Google built safety features into the Gemini 2.5 Computer Use model: every action it proposes passes through an external check before it is carried out, and developers can require the agent to get confirmation from a human before performing higher-risk actions, such as transactions or system changes.
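The per-step safeguard can be sketched the same way. In this hypothetical Python example, `safety_check` stands in for the external check (its rules and the `HIGH_RISK` and `BLOCKED` action sets are invented for illustration, not Google’s actual policy), and `ask_human` is a callback representing the human confirmation step developers can require. An action reaches the environment only if the check allows it outright, or if it needs confirmation and a human approves.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Action:
    name: str
    args: dict = field(default_factory=dict)


# Hypothetical policy, invented for illustration only.
HIGH_RISK = {"purchase", "change_settings"}  # require human confirmation
BLOCKED = {"delete_account"}                 # never executed


def safety_check(action: Action) -> str:
    """Hypothetical external check: returns "allow", "confirm", or "block"."""
    if action.name in BLOCKED:
        return "block"
    if action.name in HIGH_RISK:
        return "confirm"
    return "allow"


def gated_execute(
    action: Action,
    execute: Callable[[Action], None],
    ask_human: Callable[[Action], bool],
) -> bool:
    """Run the check before acting; return True only if the action was executed."""
    verdict = safety_check(action)
    if verdict == "block":
        return False
    if verdict == "confirm" and not ask_human(action):
        return False
    execute(action)
    return True


def deny(action: Action) -> bool:
    return False  # simulates a human who declines every confirmation request


executed: list = []
results = [gated_execute(Action(n), executed.append, deny) for n in ("click", "purchase", "delete_account")]
print(results)                     # [True, False, False]
print([a.name for a in executed])  # ['click']
```

Keeping the check outside the model, as a separate step the executor always runs, means a bad proposal from the model can still be stopped before it touches the interface.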

The company urges anyone building with these tools to test thoroughly before deploying their agents more widely. “The only way to build agents that will benefit everyone is to be responsible from the start,” a Google spokesperson explained.

Testers have already been putting the model through its paces. Some Google teams use it for quality assurance, running automated interface checks that speed up development compared with traditional testing methods.

Early access developers also report strong results in areas such as workflow automation and digital assistants, using the system to schedule appointments, categorize virtual sticky notes, and carry out fast multi-step tasks that would tax a human’s patience.

Developers interested in exploring these capabilities can find the Computer Use tool in Google AI Studio and Vertex AI. The hope is that with careful implementation, this new class of digital agent will not just handle repetitive chores, but open up the next wave of smarter user experiences.